ConceptNet’s strong performance at SemEval 2018

At the beginning of June, we went to the NAACL conference and the SemEval workshop. SemEval is a yearly event where NLP systems are compared head-to-head on semantic tasks, and how they perform on unseen test data.

I like to submit to SemEval because I see it as the NLP equivalent of pre-registered studies. You know the results are real; they’re not cherry-picked positive results, and they’re not repeatedly tuned to the same test set. SemEval provides valuable evidence about which semantic techniques actually work well on new data.

Recently, SemEval has been a compelling demonstration of why ConceptNet is important in semantics. The results of multiple tasks have shown the advantage of using a knowledge graph, particularly ConceptNet, and not assuming that a distributional representation such as word2vec will learn everything there is to learn.

Last year we got the top score (by a wide margin) in the SemEval task that we entered using ConceptNet Numberbatch (pre-trained word vectors built from ConceptNet). I was wondering if we had really made an impression with this result, or if the field was going to write it off as a fluke and go on as it was.

We made an impression! This year at SemEval, there were many systems using ConceptNet, not just ours. Let’s look at the two tasks where ConceptNet made an appearance.

Story understanding¶

Task 11: Machine Comprehension Using Commonsense Knowledge is a task where your NLP system reads a story and then answers some simple questions that test its comprehension.

There are many NLP evaluations that involve reading comprehension, but many of them are susceptible to shallow strategies where the machine just learns to parrot key phrases from the text. The interesting twist in this one is that about half of the answers are not present in the text, but are meant to be inferred using common sense knowledge.

Here’s an example from the task paper, by Simon Ostermann et al.:

Text: It was a long day at work and I decided to stop at the gym before going home. I ran on the treadmill and lifted some weights. I decided I would also swim a few laps in the pool. Once I was done working out, I went in the locker room and stripped down and wrapped myself in a towel. I went into the sauna and turned on the heat. I let it get nice and steamy. I sat down and relaxed. I let my mind think about nothing but peaceful, happy thoughts. I stayed in there for only about ten minutes because it was so hot and steamy. When I got out, I turned the sauna off to save energy and took a cool shower. I got out of the shower and dried off. After that, I put on my extra set of clean clothes I brought with me, and got in my car and drove home.

Q1: Where did they sit inside the sauna?

(a) on the floor

(b) on a benchQ2: How long did they stay in the sauna?

(a) about ten minutes

(b) over thirty minutes

Q1 is not just asking for a phrase to be echoed from the text. It requires some common sense knowledge, such as that saunas contain benches, that benches are meant for people to sit on, and that people will probably sit on a bench in preference to the floor.

It’s no wonder that the top system, from Yuanfudao Research, made use of ConceptNet and got a boost from its common sense knowledge. Their architecture was an interesting one I haven’t seen before — they queried the ConceptNet API for what relations existed between words in the text, the question, and the answer, and used the results they got as inputs to their neural net.

I hadn’t heard about this system before the workshop. It was quite satisfying to see ConceptNet win at a difficult task without any effort from us!

Telling word meanings apart¶

Our entry this year was for Task 10: Capturing Discriminative Attributes, a task about recognizing differences between words. Many evaluation tasks, including the multilingual similarity task that we won last year, involve recognizing similar words. For example, it’s good for a system to know that “cappuccino” and “espresso” are similar things. But it’s also important for a system to know how they differ, and that’s what this task is about.

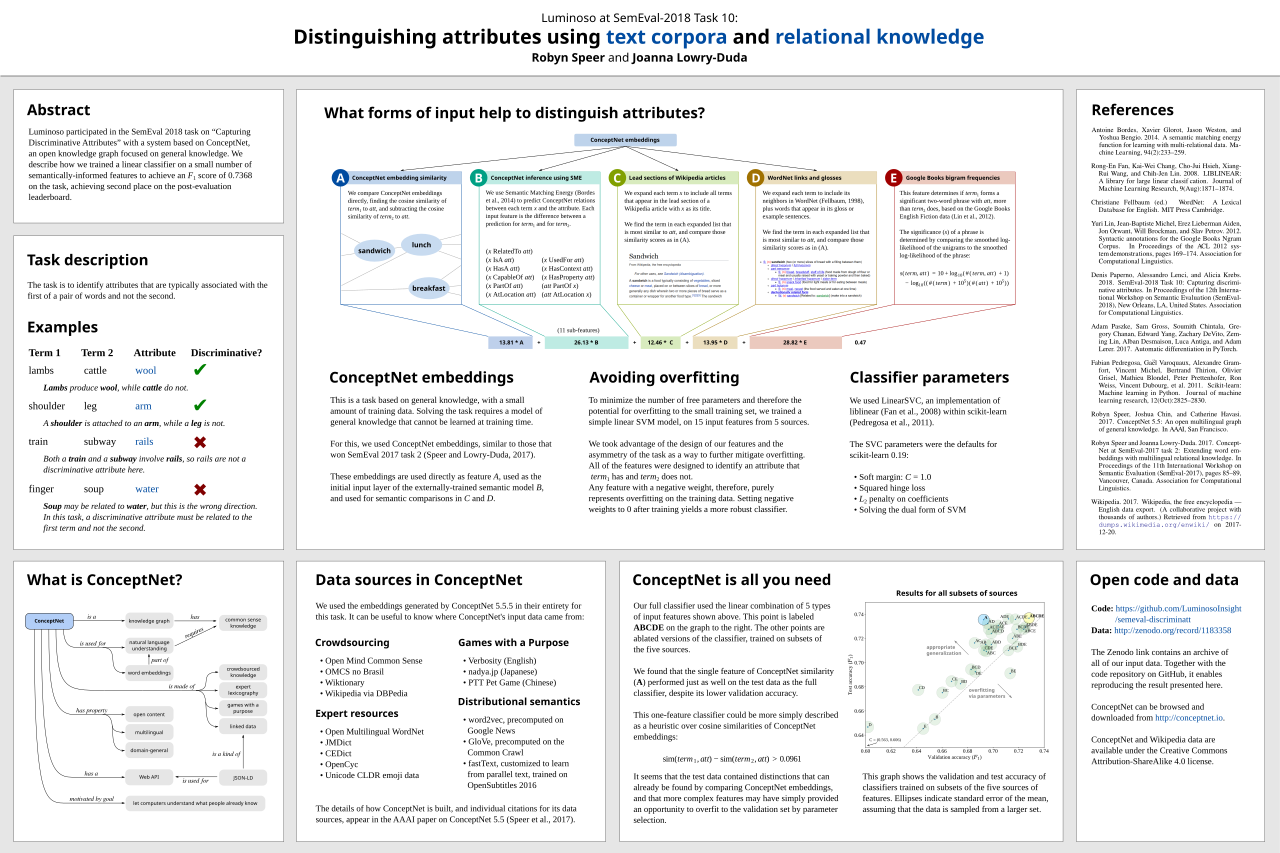

Our entry used ConceptNet Numberbatch in combination with four other resources, and took second place at the task. Our system is best described by our poster, which you can now read from the comfort of your Web browser.

In their summary paper, the task organizers (Alicia Krebs, Alessandro Lenci, and Denis Paperno) highlight the fact that systems that used knowledge bases performed much better than those that didn’t. Here’s a table of the results, which we’ve adapted from their paper and annotated with the largest knowledge base used by each entry:

| Rank | Team | Score | Knowledge base |

|---|---|---|---|

| 1 | SUNNYNLP | 0.75 | Probase |

| 2 | Luminoso | 0.74 | ConceptNet |

| 3 | BomJi | 0.73 | |

| 3 | NTU NLP | 0.73 | ConceptNet |

| 5 | UWB | 0.72 | ConceptNet |

| 6 | ELiRF-UPV | 0.69 | ConceptNet |

| 6 | Meaning Space | 0.69 | WordNet |

| 6 | Wolves | 0.69 | ConceptNet |

| 9 | Discriminator | 0.67 | |

| 9 | ECNU | 0.67 | WordNet |

| 11 | AmritaNLP | 0.66 | |

| 12 | GHH | 0.65 | |

| 13 | ALB | 0.63 | |

| 13 | CitiusNLP | 0.63 | |

| 13 | THU NGN | 0.63 | |

| 16 | UNBNLP | 0.61 | WordNet |

| 17 | UNAM | 0.60 | |

| 17 | UMD | 0.60 | |

| 19 | ABDN | 0.52 | WordNet |

| 20 | Igevorse | 0.51 | |

| 21 | bicici | 0.47 | |

| human ceiling | 0.90 | ||

| word2vec baseline | 0.61 |

The winning system made very effective use of Probase, a hierarchy of automatically extracted “is-a” statements about noun phrases. Unfortunately, Probase was never released for non-academic use; it became the Microsoft Concept Graph, which was recently shut down.

We can see here that five systems used ConceptNet in their solution, and their various papers describe how ConceptNet provided a boost to their accuracy.

In our own results, we encountered the surprising retrospective result that we could have simplified our system to just use the ConceptNet Numberbatch embeddings, and no other sources of information, and it would have done just as well! You can read a bit more about this in the poster, and I hope to demonstrate this simple system in a tutorial post soon.

Comments

Comments powered by Disqus